What if we apply single-effect Poisson regression alternatively? Let’s consider this parameterization of Poisson distribution: Previously, we introduced (triplets for each gene , cell , and factor ) to derive closed-form variational update equations. But the overall complexity can increase in a cubic time. Can we factorize into two parts, like ? It will certainly lead to a more restrictive approximation objective. Perhaps, the lower-bound induced by the factored auxiliary variables will be not as tight as before. Still, we can show that some sort of alternating regression algorithm can benefit from having two factored auxiliary variables.

Let us define latent variables, and under variational approximation:

Regression 1: Approximation from the rows’ perspective

We want to estimate given .

Find the most correlated factor (softly).

with , and

Regression 2: Approximation from the columns’ perspective

where , and

As a result, we will maximize the following lower-bound:

Example

## user system elapsed

## 206.659 327.108 41.356

u.order <- order(apply(uu, 1, which.max))

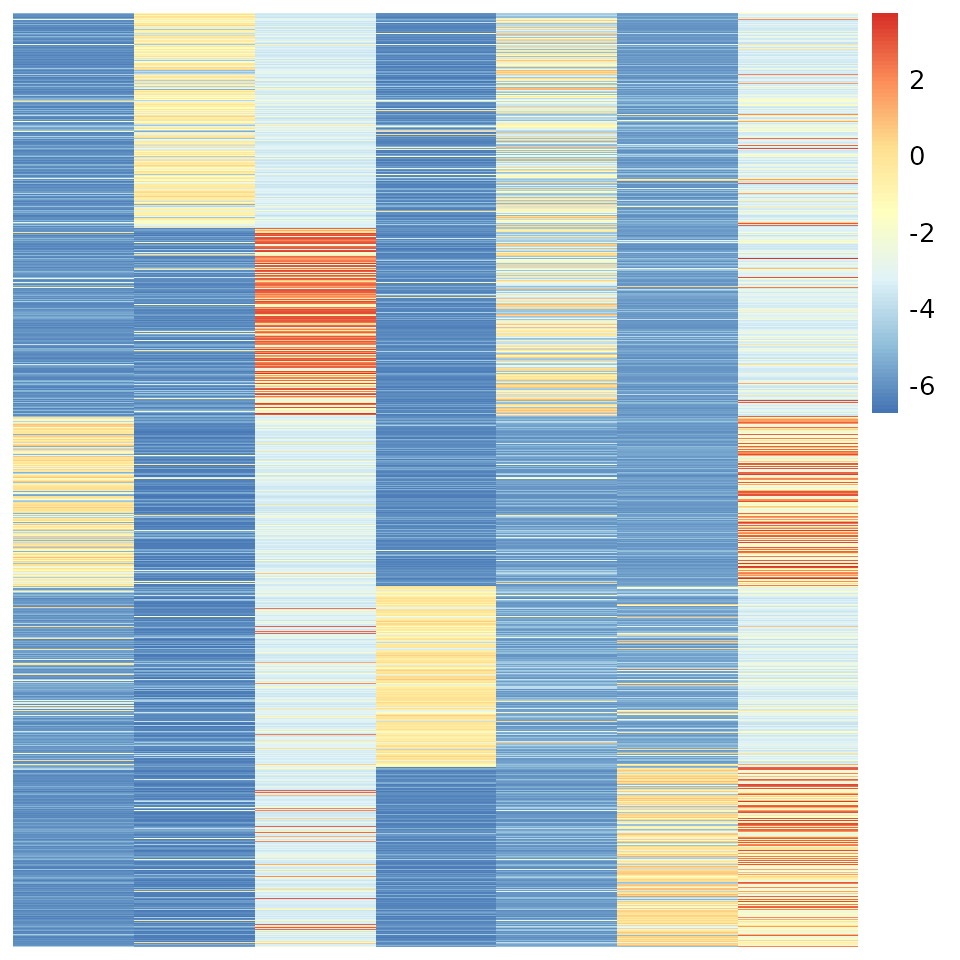

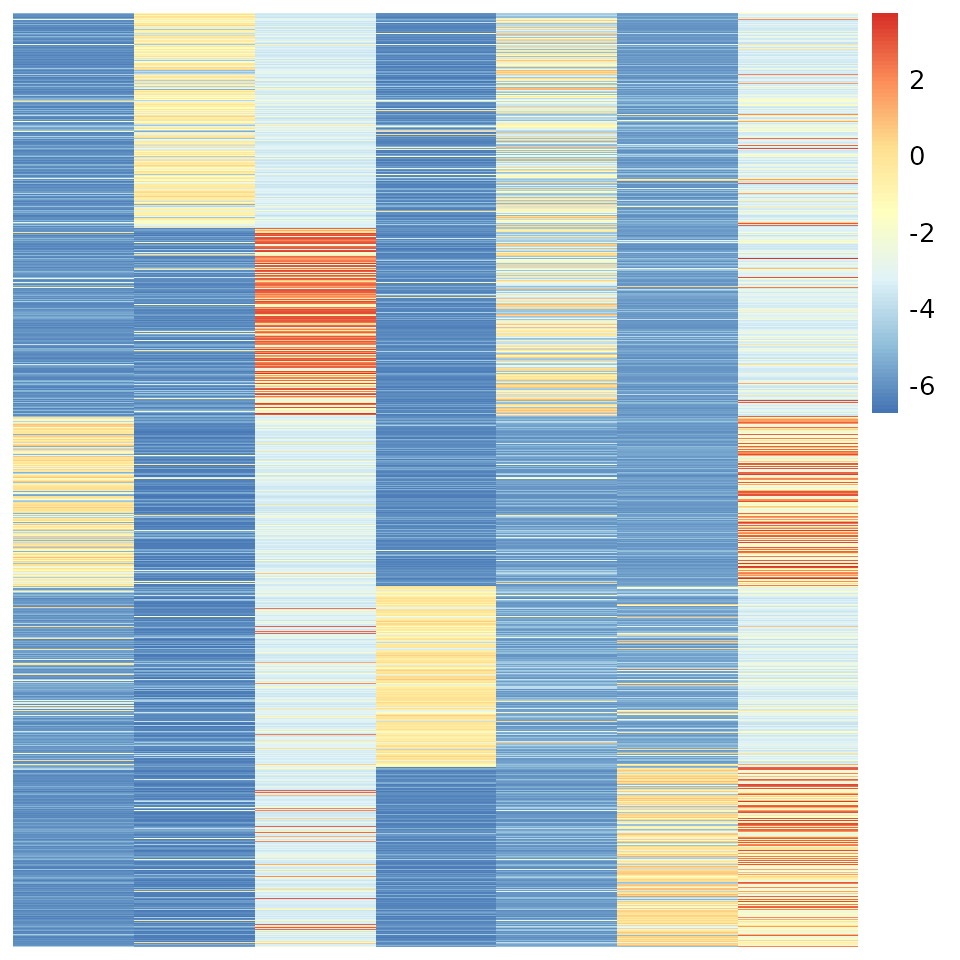

pheatmap(out$log.beta[u.order, ], Rowv=NA, Colv=NA, cluster_rows = F, cluster_cols = F)

v.order <- order(apply(vv, 1, which.max))

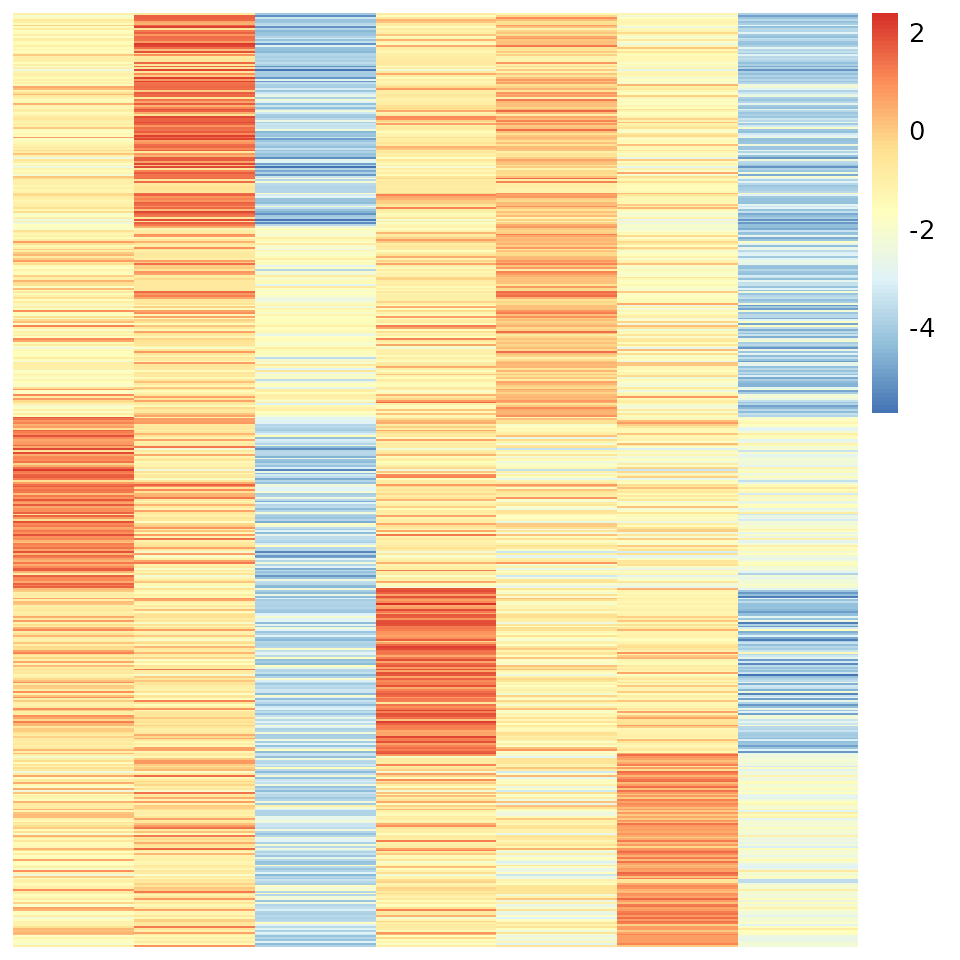

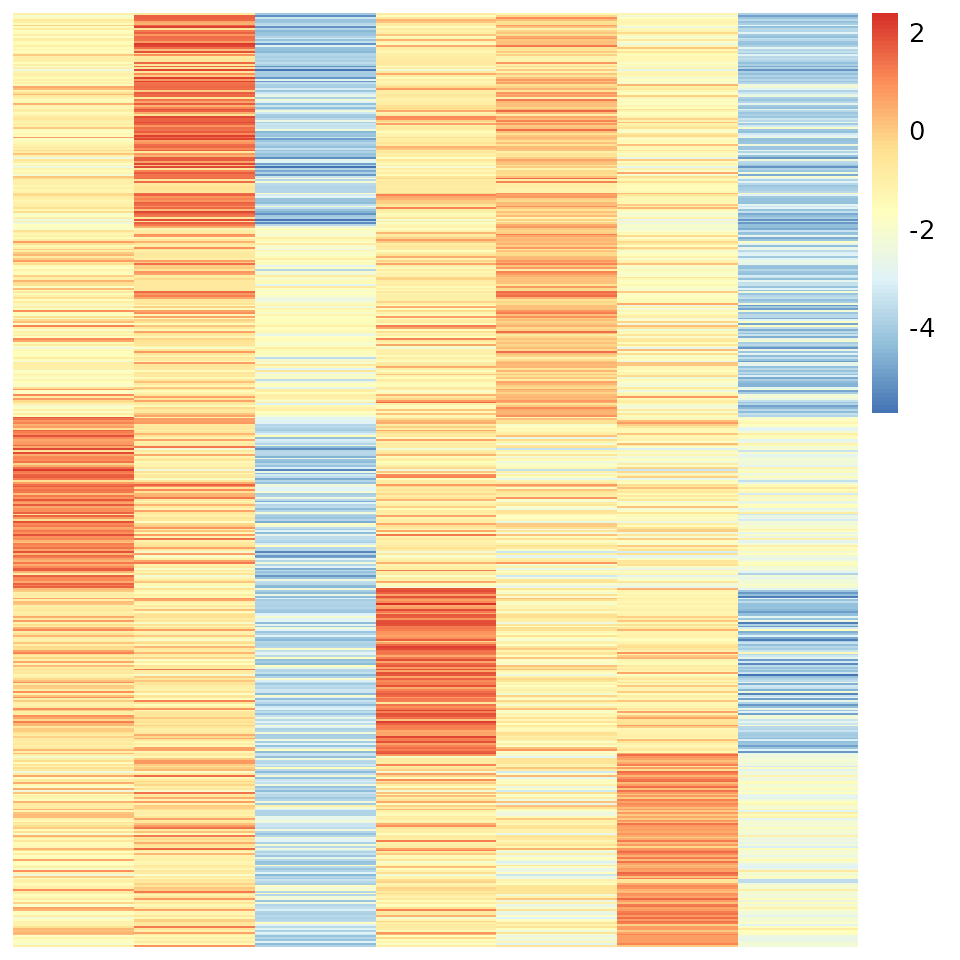

pheatmap(out$log.theta[v.order, ], Rowv=NA, Colv=NA, cluster_rows = F, cluster_cols = F)